Design Depths is an exhibition project of the Industrial Design Studio, Faculty of Multimedia Communications, Tomas Bata University in Zlín.

This experimental project incorporates an innovative approach to industrial design and builds on past experiences, specifically the ModificAI project, which was presented at Bratislava Design Week in 2023.

Design Depths responds to this year’s central theme—relationships. These relations are showcased through visual connections between objects from various design fields, such as furniture, consumer goods, and automotive design. The project aims to depict relationships on several levels.

The work of an industrial designer is deeply connected with the effort to find relationships, often hidden, between the various criteria that a well-designed product must meet. These are relationships between form, function, psychological, economic, and other equally important factors that influence the design process. The Industrial Design Studio at FMK UTB in Zlín has long emphasized practical relationships with prominent Czech and global companies, which has been consistently reflected in numerous successes in international competitions. The Design Depths project aims to explore the relationships between objects in latent space, a direction opened by new advances in artificial intelligence.

A key component of the Design Depths project is the DeepMDF experiment, which utilizes machine learning and expands on the concepts of the previous ModificAI project.

DeepMDF enables the exploration of latent space, a space of relationships between objects that are not immediately apparent to human observers. Machine learning reveals new possibilities for industrial design, whether by generating alternative solutions for practical applications or in the realm of speculative design. DeepMDF will be showcased at BADW through an audiovisual projection, accompanied by data visualizations and analyses that reveal relationships between the examined products. Each product category displayed will be complemented and represented by physical models or scale implementations.

The selection of physically presented works reflects the studio’s focus on collaboration with industry and includes projects that have received prestigious design awards such as the Red Dot Design Award, the European Design Award, and the Czech National Design Award.

DeepMDF

The DeepMDF project expands upon the previous ModificAI installation, first introduced at the 2023 Bratislava Design Week. This year's theme, relationships, is explored through visual connections across different design domains, from furniture to automotive. The project uses machine learning to uncover latent relationships between objects, which may not be obvious to human observers. It demonstrates these relationships on multiple levels, including between human and AI-generated art, form and function, and entirely different product categories. DeepMDF opens new avenues in both practical industrial design and speculative design theory.

The exploration of the latent space allows the discovery of hidden connections, which inspire innovative design variations. This multi-dimensional space fosters the creation of experimental solutions, expanding the boundaries of conventional design thinking. The project offers both practical implications, such as the generation of alternative variations and amalgamations, and a theoretical dimension through speculative design, which encourages deeper contemplation of the role AI/ML could play in the future of creative design processes.

By visualizing these complex relationships, DeepMDF demonstrates how AI can influence not only the aesthetic dimensions of design but also its functional aspects. The relationship between human creativity and AI-generated results is explored as a dynamic interplay, with potential applications across industries. Through this project, the team continues to investigate the boundaries between machine learning and industrial design, pushing forward the discourse on how AI might shape future design paradigms.

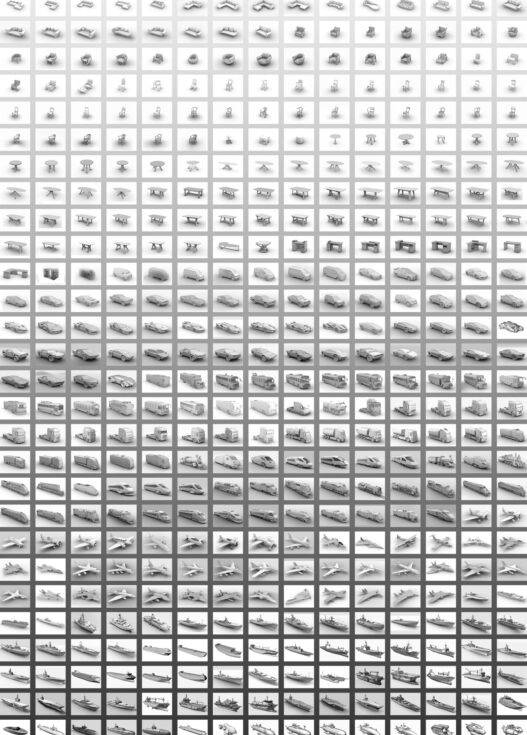

Input data preparation

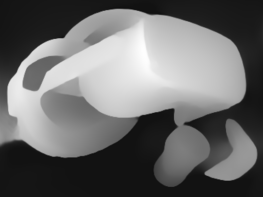

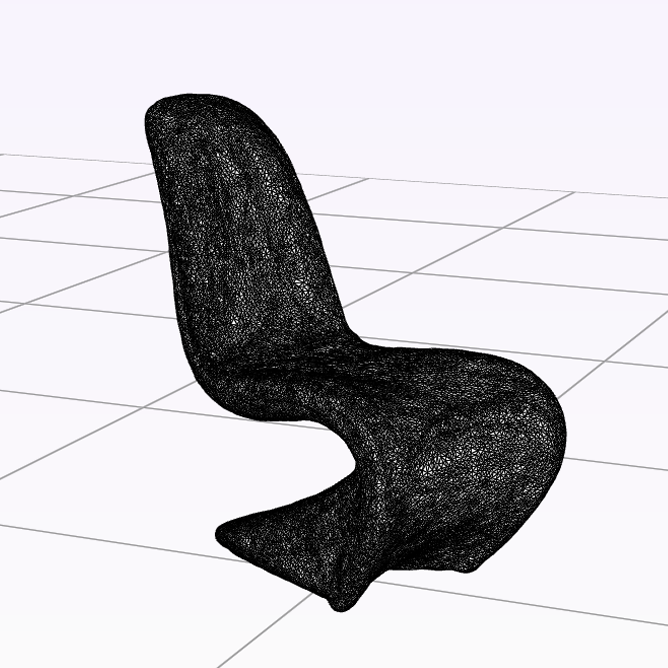

The input dataset for the DeepMDF project was created using 3D modeling software Rhinoceros. The visualizations featured a monochromatic color scheme on a white background with a preset camera position. Each product section was represented by various objects, which were then utilized in experimental research to explore novel forms within the dataset. The project's methodology enabled the investigation of object relationships, facilitating the generation of new design solutions through the combination and variation of existing forms, pushing the boundaries of design possibilities.

This approach taps into the flexibility of Rhinoceros to generate clean, highly controlled visual outputs, optimizing the way forms are analyzed across different sections. By keeping the aesthetic simple, the experiment focused on pure form, reducing distractions from color or texture. Each object from a specific product section, such as furniture, automotive, or consumer goods, was intentionally chosen to represent a unique shape language. These objects served as "building blocks" in further experiments with form generation, using techniques like blending, variation, and interpolation or combination to produce modified new shapes.

Through the manipulation of these visual elements, the project explored hidden relationships between objects that aren't immediately apparent to the human eye. The dataset allowed for identifying unique intersections of shapes and functions that traditional design methods might overlook. The idea of latent space is crucial here—it refers to the uncharted territory where new forms arise from known objects by tweaking parameters or mixing features, creating innovative designs while retaining a sense of connection to the original dataset.

This methodology emphasizes algorithmic exploration, where technology aids designers in discovering and generating forms that combine the familiar with the unexpected. Such experiments not only reveal practical variations for design but also push speculative boundaries, questioning how far we can stretch existing design languages using AI and machine learning techniques.

The /blend function in MidJourney enables users to experiment with new forms by combining up to five images. This blending process mixes and rearranges the input data, allowing the creation of unique, hybrid designs. When products from different categories are blended, it raises interesting questions about how these categories interact. This method generates intermediary forms that sit between the original images, opening opportunities to explore new, innovative shapes based on the combination of various design elements from different fields.

The resulting hybrid set of images (both original and synthesized) served as the foundation for generating interpolations between keyframes. This process enabled smooth transitions between individual objects and their design variants, leading to new forms and shapes that extend beyond the original task. The interpolation between images demonstrates the capability of artificial intelligence to draw creative connections between different designs, using machine learning techniques to generate innovative visual solutions.

Another area of exploration focused on the relationship between text and image. A key question was what combination of keywords and modifiers a prompt should contain to generate the desired visualization. Using the /describe function in MidJourney, various object descriptions were tested, such as a view of a 3D model of a minimalist white sofa on a white background, emphasizing details and different angles. This analysis provided better insight into how textual descriptions influence the resulting visualizations.

Example of reverse analysis from image to text with /describe:

- Sofa, white background, perspective view, simple and clean, 3D model rendering, high-definition texture details --ar 4:3

- Sofa, white background, isometric view, no shadow on the ground, white sofa with two armrests and one back cushion, simple shape, high quality, high resolution, 3D rendering, white background, isometric perspective, top-down angle, high definition --ar 4:3

- A 3D white sofa with a simple and minimalistic design, set against a white background. The image is taken from a top-down perspective, with no shadows or projections visible. The sofa has three soft cushions and is empty, without any objects or additional furniture inside. It is a single-seat design with armrests on both sides, and an extra-long chaise lounge on the right end. --ar 4:3

- Sofa, white background, isometric view, no shadow on the ground, 3D rendering, white color theme, minimalist style, high resolution, high detail, high quality, high resolution, high details, high contrast, bright colors, simple and clean design, white background, isometric view, no shadows, high-resolution --ar 4:3

Based on the prompts above, ChatGPT was used to generate new prompts. This approach made it possible to bring different combinations of keywords and phrases into the process of exploring and generating new variations.
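The idea of testing keyword and modifier combinations can also be sketched programmatically. The following is a minimal illustration, not the authors' workflow (which relied on ChatGPT): the word lists and the `build_prompts` helper are hypothetical, and only the `--ar 4:3` parameter and the general phrasing are taken from the /describe outputs above.

```python
from itertools import product

# Hypothetical vocabulary, loosely drawn from the /describe outputs above.
SUBJECTS = ["Sofa", "Armchair"]
VIEWS = ["perspective view", "isometric view"]
STYLES = ["simple and clean", "minimalist style"]


def build_prompts(subjects, views, styles, aspect_ratio="4:3"):
    """Return one prompt string per (subject, view, style) combination."""
    prompts = []
    for subject, view, style in product(subjects, views, styles):
        parts = [subject, "white background", view, style, "3D rendering"]
        prompts.append(", ".join(parts) + f" --ar {aspect_ratio}")
    return prompts


if __name__ == "__main__":
    for prompt in build_prompts(SUBJECTS, VIEWS, STYLES):
        print(prompt)
```

Enumerating every combination makes it easier to compare which modifier changes which aspect of the generated image, since neighbouring prompts differ in exactly one phrase.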

The visual representation uses t-SNE (t-distributed Stochastic Neighbor Embedding), a machine learning technique for dimensionality reduction that is used primarily to visualize high-dimensional data. It maps similar data points close together in a lower-dimensional space while preserving local relationships. This makes it particularly useful for visualizing complex datasets, allowing intuitive exploration of relationships and patterns within the data.
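For reference, the standard t-SNE formulation can be summarized as follows. The notation is an assumption introduced here: $x_i$ stands for the high-dimensional feature vector of the $i$-th image (how those features were obtained is not stated in this text), $y_i$ for its position in the 2D map, $N$ for the number of images, and $\sigma_i$ for a per-point bandwidth set by the perplexity parameter.

$$
p_{j|i} = \frac{\exp\left(-\lVert x_i - x_j \rVert^2 / 2\sigma_i^2\right)}{\sum_{k \neq i} \exp\left(-\lVert x_i - x_k \rVert^2 / 2\sigma_i^2\right)},
\qquad
p_{ij} = \frac{p_{j|i} + p_{i|j}}{2N}
$$

$$
q_{ij} = \frac{\left(1 + \lVert y_i - y_j \rVert^2\right)^{-1}}{\sum_{k \neq l} \left(1 + \lVert y_k - y_l \rVert^2\right)^{-1}},
\qquad
C = \mathrm{KL}(P \,\Vert\, Q) = \sum_{i \neq j} p_{ij} \log \frac{p_{ij}}{q_{ij}}
$$

The map positions $y_i$ are found by minimizing $C$ with gradient descent. Because $p_{ij}$ is large only for close neighbours, the cost mainly penalizes placing similar objects far apart, which is why local relationships are preserved better than global distances.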

The following video showcases the journey through the latent space of machine learning and industrial design, illustrating how these fields intersect and contribute to innovative design processes. This exploration highlights the potential of machine learning techniques in generating creative solutions and transforming traditional design paradigms.

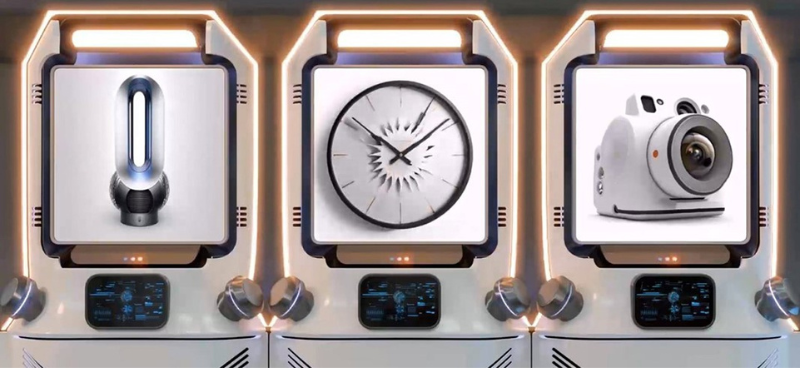

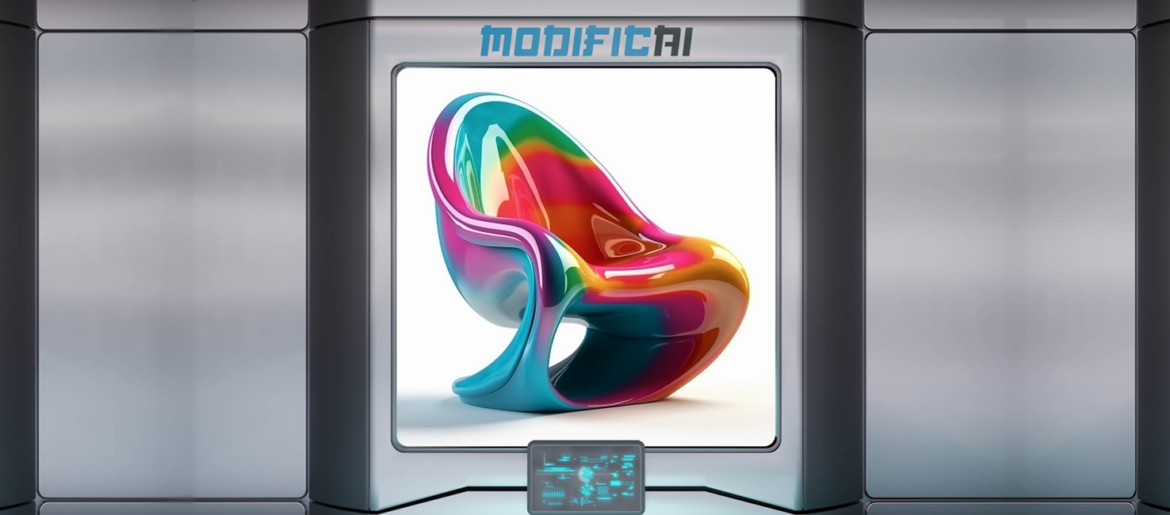

ModificAI 1.0

ModificAI conceptually represents an imaginary machine that is able to create and vary different designs of objects. It is an audiovisual projection for which 18 different iconic products from the field of industrial design were selected. These products were subsequently varied using artificial intelligence tools in such a way that part of their original identity was preserved, while their design language, materials, and other characteristics changed significantly. The projection itself consists of 3 ModificAI machines, which display the gradual changes of individual products from the original design towards increasingly distant variants. For a smooth transition between individual objects, neural networks were used to calculate the interpolation, so that the objects really seem to transform into one another.

The main goal of the project was to show the current possibilities of generative artificial intelligence tools and their rapid development over time. The project was implemented at the beginning of 2023, when tools such as MidJourney - which was used within the project - began to provide the first truly relevant and believable results. The author's goal was to show not only how we as designers can use artificial intelligence tools as sources of inspiration, but also what the use of artificial intelligence could look like in a few years, when time and technology have advanced again. The author's effort was to create an experimental project on the border between industrial, graphic, and digital design and to map to what extent it is possible to believably vary designer products and then create a smoothly animated transition between them in a fully automated way.

ModificAI 1.0 was first presented at the Slovak University of Technology in Bratislava as part of the multidisciplinary platform Ad: creative centre, Pechtle.mechtle. This event showcased the project's innovative approach to design and artificial intelligence, merging creative disciplines to explore new ways of generating visual outputs using AI-driven methods.

Interpolations between pairs of images were created using the FILM (Frame Interpolation for Large Motion) tool by Google Research. FILM uses deep learning to generate interpolated frames between two input images and is specifically designed for scenarios with large motion. By analyzing the motion and context of the images, it creates smooth intermediate frames that improve the fluidity and visual coherence of the resulting video sequence.
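One common way to obtain many in-between frames from a two-image interpolator is to predict the midpoint frame and then recurse on each half. The sketch below illustrates only that recursion. It assumes the interpolator can produce a midpoint frame, and it substitutes a plain per-pixel average (the hypothetical `blend` function) for the neural network, so it is not FILM itself and does not use FILM's API.

```python
def blend(frame_a, frame_b):
    """Placeholder midpoint: a per-pixel average of two grayscale frames.

    FILM would predict a motion-aware in-between frame here; the average is
    only a stand-in so the recursion can run without a neural network.
    """
    return [(a + b) / 2 for a, b in zip(frame_a, frame_b)]


def interpolate_recursively(frame_a, frame_b, passes, midpoint=blend):
    """Return frame_a, the in-between frames, and frame_b in order."""
    if passes == 0:
        return [frame_a, frame_b]
    middle = midpoint(frame_a, frame_b)
    left = interpolate_recursively(frame_a, middle, passes - 1, midpoint)
    right = interpolate_recursively(middle, frame_b, passes - 1, midpoint)
    return left + right[1:]  # drop the duplicated middle frame


if __name__ == "__main__":
    frames = interpolate_recursively([0.0, 0.0], [8.0, 4.0], passes=2)
    print(len(frames), frames)
```

Each additional pass doubles the number of intervals, so `passes` recursion levels turn two keyframes into `2**passes + 1` frames.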

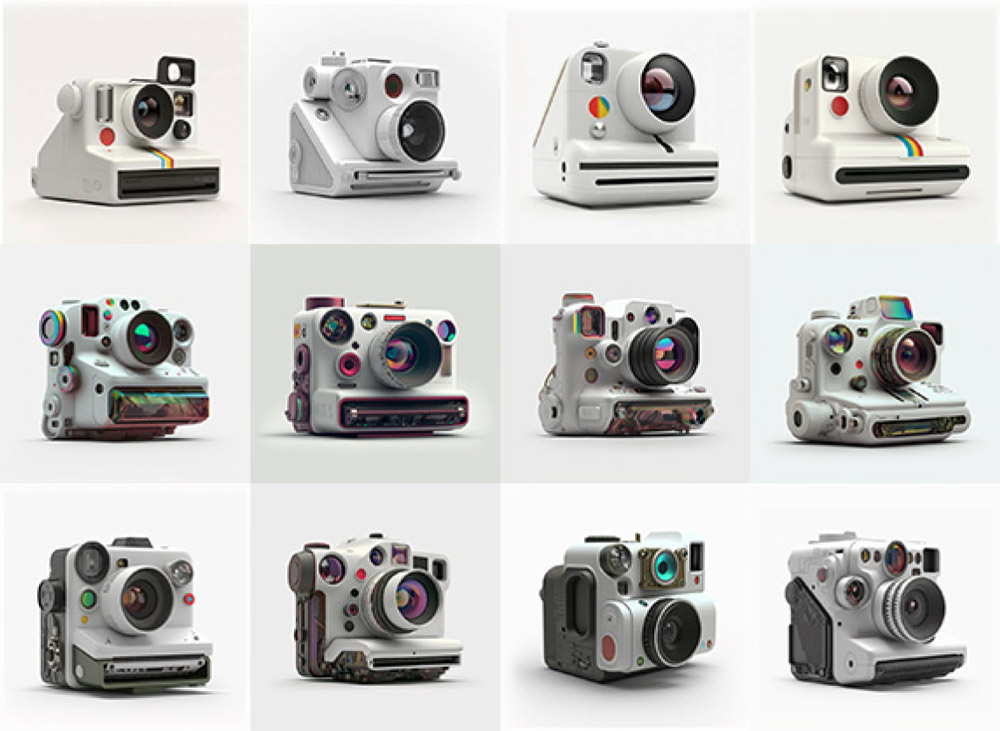

The generated outputs showcase various product forms and their exploration of shapes, with each product represented by numerous generated variants. The visual quality of the images reflects advancements in image generation based on text prompts using Midjourney version 4, illustrating the tool's capabilities in creating diverse and creative design solutions.

ModificAI 2.0

The project Dialogue Diffusion Design of the Industrial Design Studio at Tomas Bata University in Zlín presented a vision of the futuristic machine ModificAI 2.0. This machine generated variant visual solutions for selected iconic products that have significantly influenced the direction and development of industrial design, often shaped by the emergence of new technologies. It demonstrated that we need not fear the use of such technologies. The exhibition also featured projects focused on progressive technologies like virtual reality, parametric design, and machine learning. Products such as space stations, buildings, vehicles, and smaller items were presented through virtual reality, alongside physical models of selected design proposals.

Dialogue Diffusion Design focused on exploring the use of natural language for image generation through text-to-image models. By investigating how linguistic input can influence visual output, the project aimed to enhance the understanding and application of generative design techniques. This approach not only showcases the capabilities of modern AI technologies but also encourages experimentation with new state-of-the-art tools in industrial design.

ModificAI 2.0 also focused on exploring the new photorealistic visual quality and coherence of the Midjourney v5 model. Unlike ModificAI 1.0, which projected three images simultaneously, ModificAI 2.0 featured only one screen for its presentation. The research also presents a comparison of results from different versions of the generative model, providing insights into the advancements in visual representation and the effectiveness of the technologies employed.

ModificAI 3.0

The authors continued their experimentation with new model versions for generating visual content in the ModificAI 3.0 project. The new MidJourney 5.0 version is characterized by a higher degree of prompt coherence and understanding, leading to visually impressive results. ModificAI 3.0 enabled broader experimentation with diverse design concepts. It was featured as part of the IDENTITY exhibition, which was successfully presented at MILANO DESIGN WEEK 2024.

Within ModificAI 3.0, a large set of visual outputs was created, which were subsequently interpolated and connected into a final video. This process involved transitioning between different design variants, smoothly merging the generated visuals into a cohesive visual narrative, demonstrating the capability of AI-driven design exploration.

As part of the IDENTITY project, a dedicated website and publication were created to present the project's outcomes. These platforms served to showcase the design processes and research. The publication provides further insights into the topic of Identity.

Artificial intelligence offers an unconventional and innovative perspective

"The iconic products that have helped shape the unique identity of industrial design. It explores how these objects might have evolved in a different epoch, in the hands of a different designer, different brand or with the help of different technology. By means of text-to-image processes the AI generates new variations of the products while partly preserving their original visual identity."

— Vit Jakubíček, curator of the Identity project

The final video served as an engaging presentation of the project's ability to synthesize and evolve iconic product designs into innovative forms.

The installation later returned to Zlín for AFTER MILANO at ZLÍN DESIGN WEEK, where a new version of ModificAI was introduced.

The final output of ModificAI 3.0 featured a machine design created using traditional graphic methods. This design was integrated with the AI-generated visuals from the project to demonstrate a fusion of human creativity and machine-generated content.

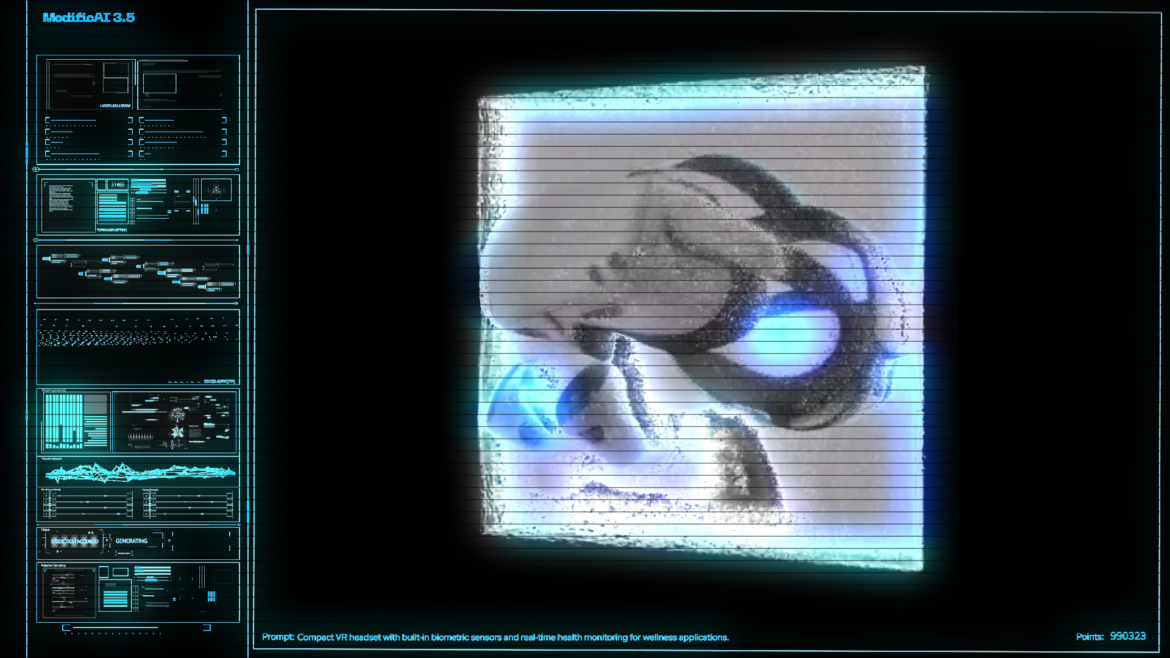

ModificAI 3.5

ModificAI 3.5 - Zlín Design Week - IDENTITY: after Milano 2024 - The Faculty of Multimedia Communications at Tomas Bata University in Zlín presented the exhibition Identity at Zlín Design Week, after it had been showcased at Milan Design Week in mid-April. The vernissage IDENTITY: after Milano 2024 took place on Thursday, May 9, in the Elements studio, Zlín, Czech Republic.

For this event, a new version, ModificAI 3.5, was developed, which focused on expanding the toolset for interpolation, morphing, and fluid transformation of objects. This iteration allowed for more flexible manipulation of shapes and designs, enhancing the experimental capabilities of the project and enabling the creation of more complex and dynamic visual outputs.

Wiggle stereoscopy with depth maps creates a 3D illusion by shifting an image between two perspectives, simulating depth perception. In this case, the effect is achieved using TouchDesigner, a visual development platform, based on a script from B2BK. The process involves reverse engineering of visual data, with AI generating prompts based on screen analysis. This approach enhances the ability to manipulate images and create dynamic visual effects through both 3D depth mapping and AI-powered automation. Version 3.5 was created in two color variations: white and black. It also featured a more complex pseudo user interface (UI) designed to enhance functionality and aesthetic coherence.
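The principle behind depth-based wiggle stereoscopy can be sketched in a few lines. This is a simplified illustration under stated assumptions, not the TouchDesigner script used in the project: images and depth maps are plain nested lists, depth values lie in [0, 1] with 1 meaning nearest, and the function names and `max_shift` parameter are hypothetical.

```python
def shifted_view(image, depth, max_shift, direction):
    """Build one eye's view by sampling each pixel from a depth-scaled offset.

    image: rows of pixel values; depth: rows of floats in [0, 1], 1 = nearest.
    direction: -1 for the left view, +1 for the right view.
    """
    view = []
    for row, depth_row in zip(image, depth):
        width = len(row)
        new_row = []
        for x in range(width):
            offset = round(direction * max_shift * depth_row[x])
            source_x = min(max(x + offset, 0), width - 1)  # clamp at the edges
            new_row.append(row[source_x])
        view.append(new_row)
    return view


def wiggle_frames(image, depth, max_shift=2, cycles=3):
    """Alternate left and right views; playing them in a loop gives the wiggle."""
    left = shifted_view(image, depth, max_shift, -1)
    right = shifted_view(image, depth, max_shift, +1)
    return [left, right] * cycles


if __name__ == "__main__":
    image = [[10, 20, 30, 40]]
    depth = [[0.0, 0.0, 1.0, 1.0]]
    for frame in wiggle_frames(image, depth, max_shift=1, cycles=1):
        print(frame)
```

Pixels with zero depth stay in place while near pixels move the most, and this difference in apparent motion between the two alternating views is what the eye reads as depth.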

The black version of 3.5 was used for experimentation with holographic projection using the Pepper's ghost imaging principle. This technique involves creating the illusion of three-dimensional, floating objects by reflecting images onto transparent surfaces, enhancing the immersive experience.

ModificAI 4.0

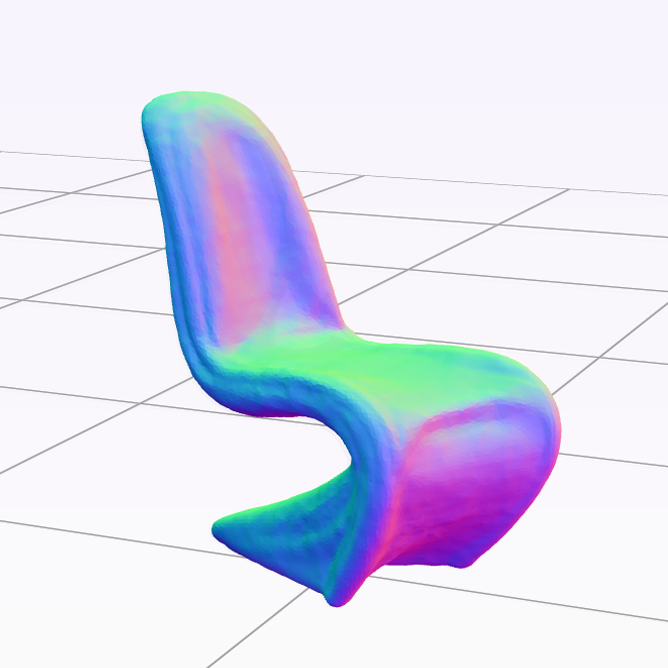

ModificAI 4.0 represents a significant advancement towards integrating 3D data into the design process. Unlike earlier versions that focused on 2D image generation, ModificAI 4.0 utilized visual outputs from previous iterations as input to produce detailed 3D models. These models were converted into the PLY format, with each point cloud comprising up to 1 million points, enabling detailed and complex representations. Morphing between forms was accomplished through point trajectory interpolation, allowing for seamless transitions between shapes, enhancing the exploration of design variations in a fully immersive 3D space.
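Point trajectory interpolation can be illustrated with a small sketch. It assumes the simplest case, where both point clouds have the same number of points and point i in one cloud corresponds to point i in the other; how the project actually matched points is not described here. The function names are hypothetical, and the writer emits only a minimal vertex-only ASCII PLY file.

```python
def morph_points(source, target, t):
    """Move every point a fraction t along the straight line to its partner.

    source and target are equal-length lists of (x, y, z) tuples; point i in
    source is assumed to correspond to point i in target.
    """
    if len(source) != len(target):
        raise ValueError("point clouds must have the same number of points")
    return [
        tuple(a + (b - a) * t for a, b in zip(p, q))
        for p, q in zip(source, target)
    ]


def to_ascii_ply(points):
    """Serialize points as a minimal ASCII PLY string (vertices only)."""
    header = [
        "ply",
        "format ascii 1.0",
        f"element vertex {len(points)}",
        "property float x",
        "property float y",
        "property float z",
        "end_header",
    ]
    body = [f"{x} {y} {z}" for x, y, z in points]
    return "\n".join(header + body) + "\n"


if __name__ == "__main__":
    chair = [(0.0, 0.0, 0.0), (1.0, 1.0, 1.0)]
    lamp = [(2.0, 4.0, 6.0), (1.0, 1.0, 1.0)]
    print(to_ascii_ply(morph_points(chair, lamp, 0.5)))
```

Stepping `t` from 0 to 1 yields the in-between shapes of the morph. For clouds of around a million points, a vectorized or GPU implementation would be needed in practice.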

This approach offers new possibilities in industrial design, enabling the study of fluid transformations and smooth morphing between different forms and concepts.

These examples emphasize the potential of converting 2D images into 3D representations, allowing rapid ideation and exploration of design concepts.

The pipeline for ModificAI 4.0 was designed to automate object rotation and camera orbiting, in tandem with morphing of 3D objects. This setup allows for the smooth transition of shapes while rotating the objects automatically. With the integration of suitable input interfaces, peripherals, and sensory technologies, it could enable users to control the 3D objects via gestures. This system offers a more dynamic and interactive experience for manipulating designs in three-dimensional space, enhancing user engagement in exploring digital objects.
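Running a camera orbit in tandem with a morph amounts to driving both from the same frame counter. The sketch below shows one way to do that. The coordinate convention (y up, camera circling the origin), the default radius and height, and the function names are all assumptions made for illustration, not details of the project's pipeline.

```python
import math


def orbit_camera(angle_deg, radius=3.0, height=1.0):
    """Camera position on a horizontal circle around the origin (y is up)."""
    angle = math.radians(angle_deg)
    return (radius * math.sin(angle), height, radius * math.cos(angle))


def timeline(frame_count, turns=1.0):
    """Yield (camera_position, morph_t) for each frame of one morph.

    morph_t rises from 0 to 1 while the camera completes `turns` orbits.
    """
    for frame in range(frame_count):
        progress = frame / (frame_count - 1) if frame_count > 1 else 0.0
        yield orbit_camera(360.0 * turns * progress), progress


if __name__ == "__main__":
    for camera, morph_t in timeline(5):
        print(f"morph t={morph_t:.2f} camera={camera}")
```

In an interactive version, the `progress` value could come from a gesture sensor instead of the frame counter, which is the kind of user control the paragraph above envisions.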

MetaModificAI

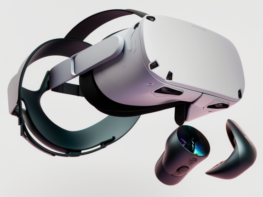

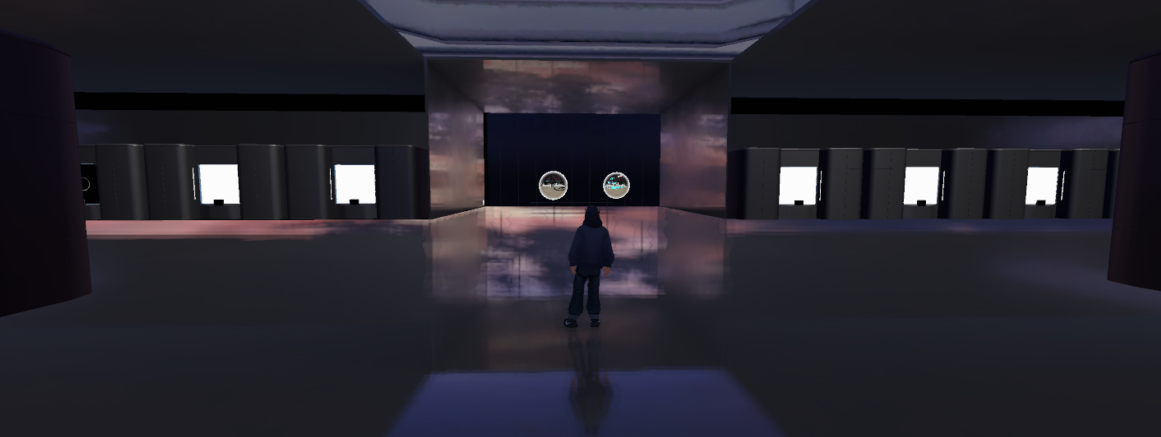

MetaModificAI was part of Zlín Design Week 2024 under the project BEYOND, showcasing three interconnected metaverse galleries. The exhibition, held in a KOMA module at Náměstí Míru, Zlín, from May 8 to May 14, 2024, highlighted collaborations with AI in 3D modeling. Posters displaying students' visions of the future and virtual sculptures reflected the intersection of art, design, and AI. The exhibition was accessible both in person and online via devices like laptops, mobile phones, and VR headsets, promoting a global, shared experience.

MetaModificAI showcased a comparison of different projection versions, allowing analysis of visual outputs. A significant innovation of the project lies in its VR presentation capabilities, which enhanced the experience by enabling immersive exploration of the designs in virtual reality. This format not only provided greater depth in viewing the design projections but also demonstrated the potential of VR as a tool for displaying intricate and complex models, unrestricted by the limitations of traditional display methods or physical environments.

The metaverse gallery is an innovative way to present design as it transcends the limitations of physical spaces, allowing for dynamic and immersive visualization. Complex mechatronic designs, which may be challenging to exhibit in traditional environments, can be fully realized in a virtual space. Designers are not restricted by physical laws or material constraints, enabling them to explore ambitious ideas. The gallery format allows for interactive experiences, where users can engage with 3D models, simulations, and other media in ways impossible in conventional exhibitions.

Design Depths was created by Martin Surman, Jakub Hrdina, Štěpán Dlabaja, and Ondřej Puchta.

ModificAI 1.0 - 3.0 was developed by Jakub Hrdina, Štěpán Dlabaja, and Valeryia Truskavetska.

From ModificAI 3.5 to DeepMDF, the project was led by Jakub Hrdina and Štěpán Dlabaja.

List of physically exhibited student works at Design Depths:

Veronika Chovančíková, Štěpán Dlabaja, Diana Hreusová, Adéla Jakubeková, Michal Juráň, Jan Kaděra, Bára Kolondrová, Vendula Kramářová, Marek Ondřej, Tomáš Skřivánek, Sabina Stržinková, Michal Štalmach, Valeryia Truskavetská, Vladimír Vykoukal and others.

Graphic: Jakub Hrdina

The ModificAI and DeepMDF project is an interdisciplinary initiative that emerged from interfaculty collaboration between the Faculty of Multimedia Communications and the Faculty of Applied Informatics at Tomas Bata University in Zlín.

We would like to thank our partner Paketo.cz for their support of the project.

© 2024 Tomas Bata University in Zlín